AMD isn’t wasting time playing catch-up in the AI space. At its “Advancing AI 2025” event, the company laid out a very specific goal: become a serious alternative to Nvidia in powering the next wave of artificial intelligence. Not by copying what Nvidia is doing, but by doubling down on open systems, better price-performance, and a developer-friendly ecosystem.

And if you think this is just about faster GPUs, it’s not. AMD’s trying to reframe the whole conversation.

The MI350 GPUs Are AMD’s Next AI Play

The highlight was the new MI350 Series, AMD’s next-generation AI accelerators. These chips are built for massive AI workloads: think large language models, data center training, and inference at scale. AMD claims a 4x generational increase in AI compute and up to 35x faster inference than the previous generation. These numbers are impressive, but as always, we’ll have to wait and see how they hold up outside of AMD’s own benchmarks.

Still, the message is clear: this is AMD’s most aggressive attempt yet to challenge Nvidia’s stronghold in AI infrastructure.

One specific chip, the MI355X, is pitched as a standout in terms of value. AMD says it delivers up to 40% more “tokens per dollar” than competing solutions, which is a fancy way of saying it gives you more AI output for the money. In a world where AI model training can run up six- or seven-figure cloud bills, that kind of cost efficiency matters.
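To make “tokens per dollar” concrete, here is a rough sketch of how the metric could be computed. The throughput and hourly price figures below are made-up placeholders chosen so the arithmetic lands on 40%; they are not AMD or Nvidia numbers, and the function names are purely illustrative.

```python
def tokens_per_dollar(tokens_per_second: float, dollars_per_hour: float) -> float:
    """Tokens generated for each dollar of accelerator time."""
    if dollars_per_hour <= 0:
        raise ValueError("dollars_per_hour must be positive")
    return tokens_per_second * 3600 / dollars_per_hour


def relative_gain(candidate: float, baseline: float) -> float:
    """Fractional improvement of candidate over baseline (0.4 means 40% more)."""
    return candidate / baseline - 1.0


# Hypothetical numbers for illustration only, not vendor benchmarks.
baseline = tokens_per_dollar(tokens_per_second=10_000, dollars_per_hour=4.00)
candidate = tokens_per_dollar(tokens_per_second=10_500, dollars_per_hour=3.00)

gain = relative_gain(candidate, baseline)
print(f"baseline:  {baseline:,.0f} tokens per dollar")
print(f"candidate: {candidate:,.0f} tokens per dollar")
print(f"gain:      {gain:.0%}")
```

Notice that in this toy example most of the gain comes from the lower hourly price rather than raw speed, which is why a tokens-per-dollar claim depends heavily on what pricing you assume.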

AMD Is Betting on Openness

But performance alone won’t unseat Nvidia. What AMD is really pushing is flexibility—something Nvidia’s locked-in CUDA ecosystem doesn’t always offer. AMD is going all-in on an open software stack with ROCm (now updated to version 7), making it easier for developers to work across platforms, tweak infrastructure, and avoid vendor lock-in.

AMD also showcased real-world deployments, including full rack-scale systems already running with Oracle Cloud and Meta. These aren’t just lab prototypes—they’re in production, powering workloads for major AI companies.

What Comes Next: MI400 and Helios

AMD didn’t stop at what’s shipping now. It also teased the MI400 Series, due out in 2026, which will use a next-gen architecture and pair with its upcoming Zen 6–based EPYC CPUs. The resulting rack-scale systems, called “Helios,” are aimed at extreme scalability, with AMD claiming up to 10x the inference performance of the current generation.

It’s AMD signaling that it’s not just making a single product push—it wants to own the future AI data center stack, from the chips to the infrastructure to the software.

Software Still Matters—A Lot

One of AMD’s historic weaknesses has been software, especially when compared to Nvidia’s CUDA ecosystem, which is deeply entrenched and widely used. AMD knows it won’t gain developer trust overnight, but ROCm 7 is its best attempt yet to close that gap.

Support for popular frameworks like PyTorch and TensorFlow has improved. Developer tools are getting better. The goal? Make it easier for teams to start building on AMD instead of just defaulting to Nvidia.
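For a sense of what “building on AMD” looks like in practice, here is a small sketch that reports which backend a PyTorch install is using. It relies on the fact that ROCm builds of PyTorch expose AMD GPUs through the same `torch.cuda` interface and set `torch.version.hip`. The helper name `describe_accelerator` is made up for this example, and the snippet falls back gracefully if PyTorch isn’t installed.

```python
import importlib.util


def describe_accelerator() -> str:
    """Report which backend PyTorch would use on this machine."""
    # Avoid a hard dependency: bail out if PyTorch is not installed.
    if importlib.util.find_spec("torch") is None:
        return "torch not installed"

    import torch

    # ROCm builds reuse the torch.cuda namespace, so this check covers
    # both Nvidia and AMD GPUs.
    if not torch.cuda.is_available():
        return "cpu"

    # torch.version.hip is a version string on ROCm builds, None otherwise.
    if getattr(torch.version, "hip", None):
        return "rocm"
    return "cuda"


print(describe_accelerator())
```

The practical upshot is that a lot of existing PyTorch code written against `torch.cuda` can run on AMD hardware without changes, though custom CUDA kernels and vendor-specific libraries are a different story.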

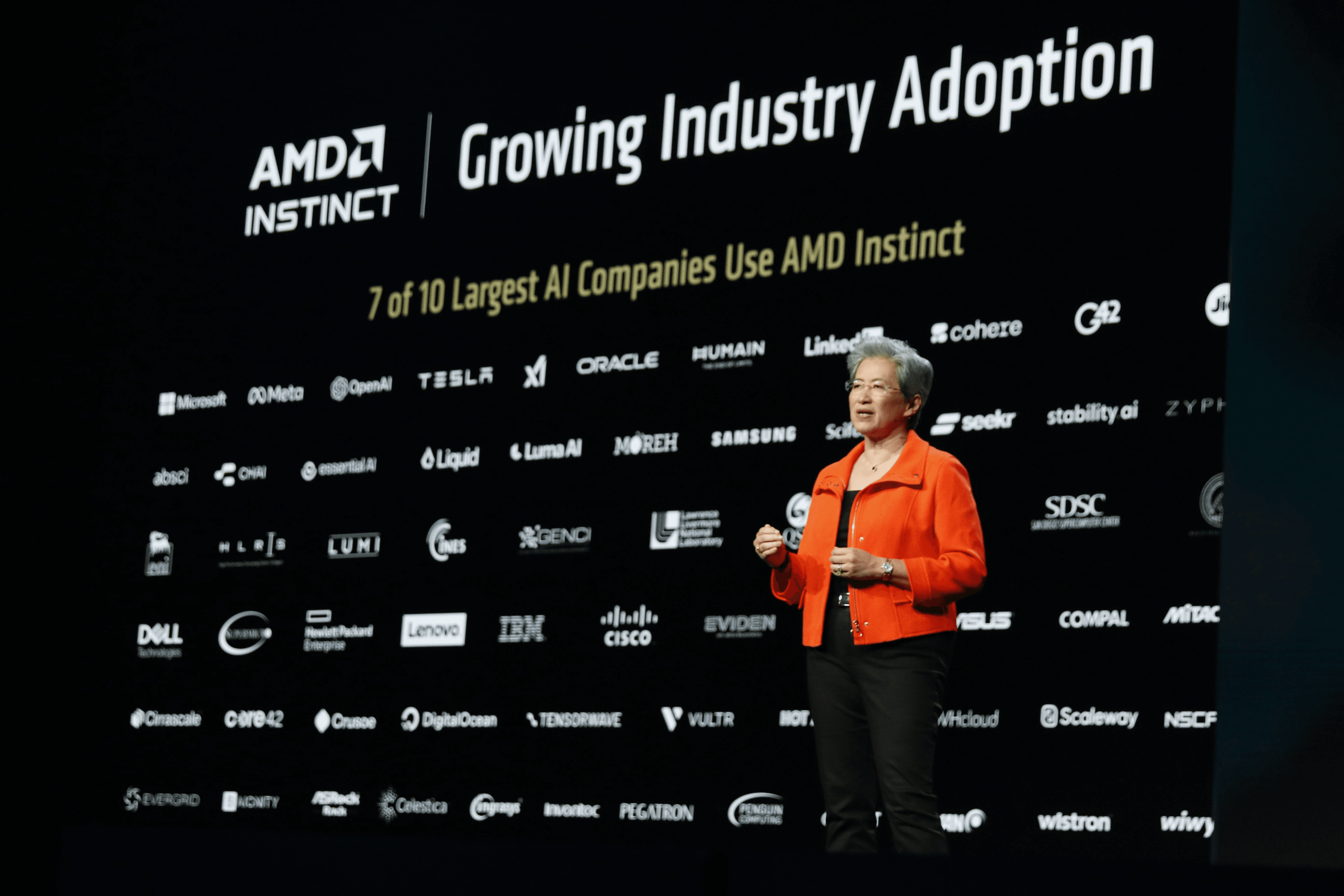

Major Partners Are Already In

AMD brought out a solid list of companies to back up the presentation. Meta is using MI300X GPUs to run Llama models. Microsoft and Oracle are integrating AMD chips into their cloud infrastructure. Even OpenAI is working with AMD to co-design future AI systems.

These are meaningful relationships—and a strong signal that AMD isn’t going it alone.

The Bottom Line

AMD’s AI event wasn’t flashy, and that might’ve been the point. This wasn’t about showmanship. It was about showing a strategy.

If you’re in the business of building or running AI models, AMD is trying to make the case that it’s the smarter, more flexible option. The challenge, of course, is that Nvidia’s ecosystem is already everywhere. But if AMD can deliver on performance, keep costs down, and make its software easier to use, the company may actually have a shot at rewriting how AI infrastructure is built.

Not bad for the underdog.